ORA-02019: connection description for remote database not found

TL;DR

When you try to create a materialized view on a different schema and the query selects from more than one remote object you get a misleading error ORA-02019: connection description for remote database not found.

Your options:

change the query to use one objects.

Connect as the other user and create the materialized view

Ugly workaround – create a materialized view for one of the remote objects and use it in the query.

Long version:

Lately, I was trying to create a materialized view. The problem was that the query behind the view was using a sub query. As a result, when I tried to run the CREATE MATERIALIZED VIEW command, I got the famous ORA-22818: subquery expressions not allowed here.

create MATERIALIZED VIEW u1.JOIN_EMP_DEPT_MV as

select a.employee_id,a.department_id ,(select department_name from hr.departments@orcl d where

d.department_id = a.department_id ) department_name

from hr.employees@orcl a ;

According to Oracle error codes, creating a materialized view containing a sub query is not supported

ORA-22818: subquery expressions not allowed here

22818. 00000 - "subquery expressions not allowed here"

*Cause: An attempt was made to use a subquery expression where these

are not supported.

*Action: Rewrite the statement without the subquery expression.

In order to Rewrite the statement without the subquery expression I used the plsql guru Steven Feuerstein advice and created a regular view with the complex query and tried to create a materialized on top of the view.

As a side note, this way of building a simple materialized view on top of a regular view is a good practice since it will allow you to change the materialized view without having to drop and recreate it.

The first part of the process went well and the view was created and worked as expected

create VIEW u1.JOIN_EMP_DEPT_V as

select a.employee_id,a.department_id ,

(select department_name from hr.departments@orcl d

where d.department_id = a.department_id ) department_name

from hr.employees@orcl a ;

However, once I tried to create the materialized view on the application schema using my private schema, I got the following message

conn U2/U2

create MATERIALIZED VIEW u1.JOIN_EMP_DEPT_MV as

select * from u1.JOIN_EMP_DEPT_V;

ORA-02019: connection description for remote database not found

This message is completely misleading. We know that the db link exists and we know it works. After all, the view was created using this db link.

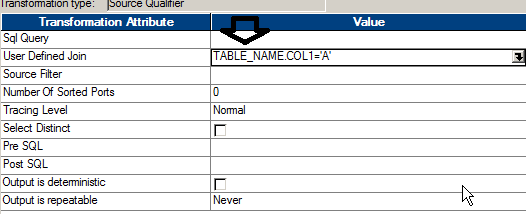

create or replace VIEW u1.JOIN_EMP_DEPT_V as

select a.employee_id,a.department_id from hr.employees@orcl a ;

One remote object – Works!

In addition, user U1 can create the the materialized view without a problem

conn U1/U1

create MATERIALIZED VIEW u1.JOIN_EMP_DEPT_MV as

select * from JOIN_EMP_DEPT_V;

also works!

Apparently, I am not the only one encountering the ORA-02019: connection description for remote database not found issue.

Creating materialized view of another user via private db link succeeded by selecting single table while failed by selecting more than one table (Doc ID 2305974.1)

Sadly, according to Oracle, this is not bug. Basically, they claim that this is the way it works and you can ask for an ENHANCEMENT REQUEST.

If creating the view with only one remote object or connecting as the other user are not an option , one ugly workaround would be to create a materialized view for one of the tables and create the join it with one remote object

create MATERIALIZED VIEW u1.JUST_DEPT_MV as

select * from hr.departments@orcl;

create or replace VIEW u1.JOIN_EMP_DEPT_V as

select a.employee_id,a.department_id ,(select department_name from u1.JUST_DEPT_MV d where d.department_id = a.department_id ) department_name

from hr.employees@orcl a ;