While running a relatively simple Informatica mapping I got the following error.

Severity: FATAL

Message Code:

Message: *********** FATAL ERROR : Caught a fatal signal or exception. ***********

Message Code:

Message: *********** FATAL ERROR : Aborting the DTM process due to fatal signal or exception. ***********

Message Code:

Message: *********** FATAL ERROR : Signal Received: SIGSEGV (11) ***********

Basically, errors like this mean that something went terribly wrong and the Informatica server did not know how to handle it. In many cases you will need to contact Informatica support to help you with a solution.

Various reasons can cause this kind of error:

The tnsnames.ora file used by the Oracle Client installed on the PowerCenter server machine is corrupted.

If this is the case, other workflows using the same tns entry would probably break as well. The solution is to recreate the tnsnames.ora file (or contact Oracle support).

Another cause of the SIGSEGV fatal error crash is a problem with the DB drivers on the Informatica server. Many users report this issue with Teradata and Oracle.

The above causes would probably be system-wide and would break many workflows. In my case, only the process I was working on had this problem, while all other workflows ran without any issue. So I could assume that the problem was limited to something specific in my mapping. I looked at the log and found that the crash happens immediately after the following message:

Message Code: PMJVM_42009

Message: [INFO] Created Java VM successfully.

This led me to the conclusion that this problem is Java related.
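The same check can be scripted when the log is long. The following is a minimal sketch, assuming the log has been exported as plain text in the "Message Code:" / "Message:" layout shown above (a real session log may be laid out differently). The function names and the sample text are hypothetical and only illustrate the idea of finding the last entry written before the first fatal error.

```python
def parse_entries(log_text):
    """Return (code, message) pairs from 'Message Code:' / 'Message:' lines."""
    entries = []
    code = ""
    for raw in log_text.splitlines():
        line = raw.strip()
        if line.startswith("Message Code:"):
            code = line[len("Message Code:"):].strip()
        elif line.startswith("Message:"):
            entries.append((code, line[len("Message:"):].strip()))
            code = ""  # a code belongs only to the message that follows it
    return entries


def last_entry_before_fatal(log_text):
    """Return the entry logged just before the first FATAL ERROR, or None."""
    previous = None
    for code, message in parse_entries(log_text):
        if "FATAL ERROR" in message:
            return previous
        previous = (code, message)
    return None  # no fatal error found in this text


# Hypothetical excerpt, assembled from the messages quoted in this post.
SAMPLE = """\
Message Code: PMJVM_42009
Message: [INFO] Created Java VM successfully.
Message Code:
Message: *********** FATAL ERROR : Caught a fatal signal or exception. ***********
"""

if __name__ == "__main__":
    print(last_entry_before_fatal(SAMPLE))
```

If the entry it returns comes from the JVM (as PMJVM_42009 does here), that points toward the Java transformation rather than the database drivers.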

It is possible that the Oracle libraries/symbols are loaded first and there is a conflict of symbols between Java libraries and Oracle client libraries.

In this case, Informatica suggests setting the following environment variables:

LD_PRELOAD = $INFA_HOME/java/jre/lib/amd64/libjava.so

LD_LIBRARY_PATH = $INFA_HOME/java/jre/lib/amd64:$LD_LIBRARY_PATH
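As a rough illustration, here is how those two values could be composed for a single process environment instead of the whole system. This is only a sketch: the function name and the `/opt/infa` path are hypothetical, and how the Integration Service actually picks up its environment is not covered here. One detail it handles is the case where LD_LIBRARY_PATH is not set yet: appending an empty value would leave a trailing colon, and the dynamic linker treats an empty entry as the current directory.

```python
import os
import posixpath  # the paths are Linux paths, so join them POSIX-style


def java_first_env(infa_home, base_env=None):
    """Return a copy of the environment with the Java libraries put first."""
    env = dict(os.environ if base_env is None else base_env)
    jre_lib = posixpath.join(infa_home, "java", "jre", "lib", "amd64")

    # Ask the dynamic linker to load libjava.so before anything else.
    env["LD_PRELOAD"] = posixpath.join(jre_lib, "libjava.so")

    # Prepend the JRE directory; skip the separator if nothing was set before.
    existing = env.get("LD_LIBRARY_PATH", "")
    env["LD_LIBRARY_PATH"] = jre_lib + (":" + existing if existing else "")
    return env


if __name__ == "__main__":
    # "/opt/infa" and "/usr/lib/oracle" are made-up example locations.
    demo = java_first_env("/opt/infa", {"LD_LIBRARY_PATH": "/usr/lib/oracle"})
    print(demo["LD_PRELOAD"])
    print(demo["LD_LIBRARY_PATH"])
```

The returned dictionary could be passed as `env=` to `subprocess.run` to try the settings on one test process before changing anything globally.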

The problem with setting environment variables is that it is a system-wide change, and there is always a risk of breaking something. So changing a setting at the workflow or session level is always preferred. This way, if something breaks, the problem is contained.

While looking for a workflow-level solution, I came across the following article, which suggests that the problem is the result of a mismatch between the Linux Java and the PowerCenter version.

“This is an issue with the Oracle JDK 1.6 in combination with RHEL Linux. Java txn, and in turn the session is impacted.

The JDK has a Just In Time (JIT) compiler component that works towards optimizing Java bytecode to underlying native assembly code. Assembly code is native and is faster than Java byte code.

The crash occurs in this JIT generated assembly code, which means that native debuggers will not be able to resolve the symbols.”

There are two solutions to the problem. The first is to force the session (DTM) to spawn the Java transformation with JDK 1.7 rather than JDK 1.6. There are details in the above link but, again, this is a major change with many possible implications.

The second (and simpler) option is to disable the JDK’s Just In Time compiler.

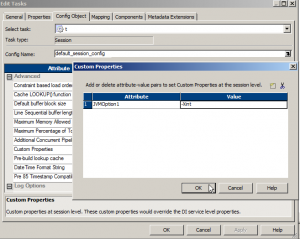

You can do this at the session level by adding the following JVM option as a custom property:

JVMOption1 = -Xint

The property is set under Edit Task > Config Object > Custom Properties.

It is important to understand that -Xint makes the JVM run in interpreted-only mode, so no JIT optimization is performed on the Java transformation’s bytecode and a performance hit is expected in the Java transformation alone. Therefore, if the Java transformation is performance sensitive, you might want to consider the first solution.